Merge (usually capitalized) is one of the basic operations in the Minimalist Program, a leading approach to generative syntax. It combines two syntactic objects to form a new syntactic unit (a set). Merge is also recursive in that it may be applied to its own output: the objects combined by Merge are either lexical items or sets that were themselves formed by Merge. This recursive property of Merge has been claimed to be a fundamental characteristic that distinguishes language from other cognitive faculties. As Noam Chomsky (1999) puts it, Merge is "an indispensable operation of a recursive system ... which takes two syntactic objects A and B and forms the new object G={A,B}" (p. 2).[1]

Mechanisms of Merge

Within the Minimalist Program, syntax is derivational, and Merge is the structure-building operation. Merge is assumed to have certain formal properties that constrain syntactic structure, and it is implemented with specific mechanisms. In a merge-based theory of language acquisition, complements and specifiers are simply notations for first-merge (read as "complement-of" [head-complement]) and later second-merge (read as "specifier-of" [specifier-head]), with Merge always applying to a head. First-merge establishes only a set {a, b}, not an ordered pair. In its original formulation by Chomsky in 1995, Merge was defined as inherently asymmetric; Moro (2000) first proposed that Merge can generate symmetrical structures, provided that they are rescued by movement and asymmetry is restored.[2] For example, an {N, N} compound of 'boat-house' would allow the ambiguous readings 'a kind of house' and/or 'a kind of boat'. It is only with second-merge that order is derived out of a set {a, {a, b}}, which yields the recursive properties of syntax. For example, 'house-boat' {house, {house, boat}} now reads unambiguously only as 'a kind of boat'. It is this property of recursion that allows projection and labeling of a phrase to take place;[3] in this case, the noun 'boat' is the head of the compound, and 'house' acts as a kind of specifier/modifier.

External Merge (first-merge) establishes the substantive 'base structure' inherent to the VP, yielding theta/argument structure, and may go beyond the lexical-category VP to involve the functional-category light verb vP. Internal Merge (second-merge) establishes more formal aspects related to edge properties of scope and discourse-related material pegged to CP. In a phase-based theory, this twin vP/CP distinction follows the "duality of semantics" discussed within the Minimalist Program, and is further developed into a dual distinction regarding a probe-goal relation.[4] As a consequence, at the "external/first-merge-only" stage, young children would be unable to interpret readings from a given ordered pair, since they would only have access to the mental parsing of a non-recursive set. (See Roeper for a full discussion of recursion in child language acquisition.)[5] In addition to word-order violations, a more pervasive result of a first-merge stage would be that children's initial utterances lack the recursive properties of inflectional morphology, yielding a strict non-inflectional stage 1, consistent with an incremental structure-building model of child language.[6]

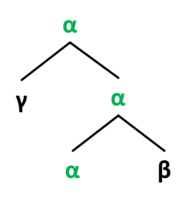

Binary branching

Merge takes two objects α and β and combines them, creating a binary structure.
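
Binary set formation and its recursive application can be illustrated with a minimal sketch. It assumes that Python frozensets stand in for syntactic objects and plain strings for lexical items; the name `merge` and the requirement that the two inputs be distinct are choices of this sketch, not part of any published formalization.

```python
from typing import Union

# A syntactic object is either a lexical item (a string here) or a set
# formed by an earlier application of merge.
SyntacticObject = Union[str, frozenset]


def merge(a: SyntacticObject, b: SyntacticObject) -> frozenset:
    """Combine exactly two syntactic objects into the unordered set {a, b}."""
    if a == b:
        # Assumption of this sketch: the two inputs must be distinct.
        raise ValueError("this sketch requires two distinct objects")
    return frozenset({a, b})


# Merge applies to its own output, which is what makes it recursive.
dp = merge("the", "book")   # {the, book}
vp = merge("read", dp)      # {read, {the, book}}
```

Because the result is a set, `merge("the", "book")` and `merge("book", "the")` are the same object: no linear order is encoded at this point.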

Feature checking

In some variants of the Minimalist Program, Merge is triggered by feature checking. For example, the verb eat selects the noun cheesecake because the verb has an uninterpretable N-feature [uN] ("u" stands for "uninterpretable"), which must be checked (or deleted) to satisfy Full Interpretation.[7] By saying that this verb has an uninterpretable nominal feature, we rule out ungrammatical constructions such as *eat beautiful, in which the verb would select an adjective. Schematically, eat [V, uN] merges with cheesecake [N], and [uN] is checked.
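
The checking step can be sketched as follows. This is only an illustration under simplifying assumptions: the class `LexicalItem`, the field `selects` and the function `checked_merge` are hypothetical names, and an uninterpretable feature [uN] is modelled as nothing more than the category label "N" that the complement must carry.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LexicalItem:
    form: str
    category: str          # e.g. "V", "N", "A"
    selects: tuple = ()    # uninterpretable features, e.g. ("N",) for [uN]


def checked_merge(head: LexicalItem, complement: LexicalItem) -> frozenset:
    """Merge head and complement only if the complement checks the head's first [uF]."""
    if not head.selects:
        raise ValueError(f"{head.form} has no uninterpretable feature to check")
    needed = head.selects[0]
    if complement.category != needed:
        raise ValueError(f"*{head.form} {complement.form}: [u{needed}] is left unchecked")
    # The checked feature is deleted on a copy of the head; the inputs are unchanged.
    checked_head = LexicalItem(head.form, head.category, head.selects[1:])
    return frozenset({checked_head, complement})


eat = LexicalItem("eat", "V", selects=("N",))
cheesecake = LexicalItem("cheesecake", "N")
beautiful = LexicalItem("beautiful", "A")

vp = checked_merge(eat, cheesecake)   # succeeds: [uN] is checked by the noun
```

Calling `checked_merge(eat, beautiful)` raises an error in this sketch, mirroring the exclusion of *eat beautiful.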

Strong features

There are three different accounts of how strong features force movement:[8][9]

1. Phonetic Form (PF) crash theory (Chomsky 1993) is conceptually motivated. The argument goes as follows: under the assumption that Logical Form (LF) is invariant, it must be the case that any parametric differences between languages reduce to morphological properties that are reflected at PF (Chomsky 1993:192). Two possible implementations of the PF crash theory are discussed by Chomsky:

- if a strong feature is visible at PF after Spell-out, because it is not a legitimate PF object, the derivation crashes (Chomsky 1993:198);

- PF rules do not apply to undeleted strong features, and so the derivation crashes (Chomsky 1993:216)

PF crash theory: A strong feature that is not checked in overt syntax causes a derivation to crash at PF.

2. Logical Form (LF) crash theory (Chomsky 1994) is empirically motivated by VP ellipsis.

LF crash theory: A strong feature that is not checked (and eliminated) in overt syntax causes a derivation to crash at LF.

3. Immediate elimination theory (Chomsky 1995)

Virus theory: A strong feature must be eliminated (almost) immediately upon its introduction into the phrase marker; otherwise, the derivation cancels.

- Pseudogapping and sluicing, two ellipsis phenomena, support the relevance of strong features as proposed by Chomsky (1993).

- The presence of a strong feature allows either movement or ellipsis to save a derivation.

- Raising that is prompted by strong features generates ellipsis.

- Strong features include the Extended Projection Principle and the split VP hypothesis.

- PF crash theory is pertinent through direct or indirect mechanisms.

- Strong features prompt overt movement. Under the PF crash theory, all of the constituents, not just formal features, must move by means of pied-piping.

Constraints

Initially, the interaction of Last Resort (LR) and the Uniformity Condition (UC) was taken to account for the structures of Bare Phrase Structure that contain labels and are constructed by Move, as well as for the effects of the Structure Preservation Hypothesis.[10]

- The Uniformity Condition requires that a chain be uniform (unchanging) with respect to phrase structure status. It has three functions that contribute to the account of movement:[10]

- It blocks the movement of a minimal, non-maximal projection to a specifier.

- It blocks the covert movement of formal features (FF) to a specifier.

- It prevents a moved non-minimal projection from projecting further after merging with its target.

- C-command: in order to maintain the desired simplicity of the Minimalist Program, c-command came to be regarded as more optimal and therefore replaced the Uniformity Condition, for two reasons:

- Methodologically, the majority of existing relations have c-command as their foundation.

- The condition of c-command on chain links posits a restriction regarding the movement of intermediate projections, unlike the Uniformity Condition.

- Last Resort is a property of Move: a feature may move to its target only if the moved feature enters a checking relation with a feature of the head it is moving to.[10] For example, D may move to SPEC C only if the maximal projection and its subsequent projection select for D.[11]

- The Minimal Link Condition (MLC) is a second important property of Move. According to the MLC, a feature can move to its target only if there is no other feature that is able to move under Last Resort and is closer to the target than the original feature (e.g. C cannot move to target A if B obeys Last Resort and is closer to target A than C).[11]
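
The interaction of Last Resort and the Minimal Link Condition described above can be sketched as a two-step filter. This is a simplification under stated assumptions: structural closeness is reduced to a hypothetical integer `distance`, the checking relation to a boolean `can_check`, and the names `Candidate` and `select_mover` are invented for the illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    distance: int      # hypothetical measure of structural distance to the target
    can_check: bool    # True if moving would create a checking relation


def select_mover(candidates: List[Candidate]) -> Optional[Candidate]:
    """Return the only candidate allowed to move, or None if nothing may move."""
    # Last Resort: discard features that would not enter a checking relation.
    licensed = [c for c in candidates if c.can_check]
    if not licensed:
        return None
    # Minimal Link Condition: among licensed candidates, only the closest may move.
    return min(licensed, key=lambda c: c.distance)


b = Candidate("B", distance=1, can_check=True)
c = Candidate("C", distance=2, can_check=True)
mover = select_mover([c, b])   # B is closer to the target and licensed, so C is blocked
```

If B could not enter a checking relation, it would be filtered out by the first step and C would be free to move.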

Projection and labeling

When we consider the features of a word that provide the label when the word projects, we assume that the categorial feature of the word is always among the features that become the label of the newly created syntactic object.[12] Cecchetto (2015) gives an example of how projection selects a head as the label.

In this example, the verb "read" unambiguously labels the structure: "read" is a word, which means that it is a probe by definition, and it selects "the book". The bigger constituent generated by merging the word with the syntactic object receives the label of the word itself.

The verb "read" is thus the head selecting the DP "the book", which makes the constituent a VP.
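
The labeling step for the "read the book" example can be sketched as below. The sketch assumes a deliberately narrow rule (when exactly one of the two merged objects is a word, its category becomes the label) and uses hypothetical names `Word`, `Phrase` and `merge_and_label`; the DP "the book" is built directly with the label D, following the DP analysis mentioned in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Word:
    form: str
    category: str


@dataclass(frozen=True)
class Phrase:
    label: str
    parts: frozenset


def merge_and_label(a, b) -> Phrase:
    """Merge a and b; if exactly one of them is a word, its category becomes the label."""
    words = [x for x in (a, b) if isinstance(x, Word)]
    if len(words) != 1:
        # Assumption of this sketch: other configurations are left unlabeled.
        raise ValueError("this sketch only labels word + phrase combinations")
    return Phrase(label=words[0].category, parts=frozenset({a, b}))


the_book = Phrase(label="D", parts=frozenset({Word("the", "D"), Word("book", "N")}))
read_the_book = merge_and_label(Word("read", "V"), the_book)   # labeled V
```

The result carries the category of "read", which corresponds to calling the constituent a VP.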

No tampering condition (NTC)

Merge operates blindly, projecting labels in all possible combinations. The subcategorization features of the head act as a filter by admitting only labelled projections that are consistent with the selectional properties of the head. All other alternatives are eliminated. Merge does nothing more than combine two syntactic objects (SOs) into a unit; it does not affect the properties of the combining elements in any way. This is called the No Tampering Condition (NTC). Therefore, if α (a syntactic object) has some property before combining with β (likewise a syntactic object), it will still have this property after it has combined with β. This allows Merge to account for further merging, which enables structures with movement dependencies (such as wh-movement) to occur. All grammatical dependencies are established under Merge: this means that if α and β are grammatically linked, α and β must have merged.[13]

Bare phrase structure (BPS)

A major development of the Minimalist Program is Bare Phrase Structure (BPS), a theory of phrase structure (structure-building operations) developed by Noam Chomsky in 1994.[14] BPS is a representation of the structure of phrases in which syntactic units are not explicitly assigned to categories.[15] The introduction of BPS moves generative grammar towards dependency grammar (discussed below), which operates with significantly less structure than most phrase structure grammars.[16] The basic operation of BPS is Merge. Bare phrase structure attempts to (i) eliminate unnecessary elements, (ii) generate simpler trees, and (iii) account for variation across languages.[17]

Bare Phrase Structure defines projection levels according to the following features:[10]

- X+max is the maximal projection; the lexical category cannot project to any further point in the tree

- X+min is the minimal projection, and corresponds to the lexical item without any of its associated projections (if any)

- X-max,-min lies between the minimal and maximal projections (this corresponds to the intermediate projection level in X-bar theory)

- the complement position is the sister to X+min

- the specifier position is the sister to the intermediate projection X-max,-min
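
The relational character of these definitions can be made concrete with a small sketch. It assumes that a node is [+min] exactly when it is the lexical item itself and [+max] exactly when it does not project any further; the function names are hypothetical, and the feature strings are just a readable rendering of the notation in the list above.

```python
def projection_features(is_lexical_item: bool, projects_further: bool) -> str:
    """Describe a node by the two relational features used in the list above."""
    is_min = is_lexical_item        # the bare lexical item is the minimal projection
    is_max = not projects_further   # a node that stops projecting is maximal
    return f"[{'+' if is_max else '-'}max, {'+' if is_min else '-'}min]"


def classify_chain(n_nodes: int) -> list:
    """Classify each node in the projection chain of one head, from bottom to top."""
    return [projection_features(i == 0, i < n_nodes - 1) for i in range(n_nodes)]


# Head, intermediate projection, and maximal projection of one lexical item.
levels = classify_chain(3)
# levels == ["[-max, +min]", "[-max, -min]", "[+max, -min]"]
```

Under these assumptions, a lexical item that does not project at all (`classify_chain(1)`) comes out as both maximal and minimal, since neither feature is tied to a fixed bar level.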

[Figures: maximal projection, minimal projection, intermediate projection]

Fundamental properties

The Minimalist Program brings into focus four fundamental properties that govern the structure of human language:[18][19]

- Hierarchical structure is modelled by a context-free grammar plus a lexicon. Together they express three irreducible structural properties of all human languages: dominance (constituents are organized hierarchically), labelling (constituents differ in type, i.e. category), and linear precedence (constituents are ordered relative to each other). The labelled hierarchical structure, along with the linearly ordered string of elements, is conveyed by a phrase structure grammar that generates a set of phrase markers (P-markers). This captures the fact that the building blocks of human language are constituents, which are the basis for phrase structure. The claim that language exhibits hierarchical structure can be traced back to the discovery procedures of structural linguistics, in the form of Immediate Constituent (IC) analysis, developed in 1947. Although generative grammar is not procedural, it nevertheless takes from immediate constituent analysis the insightful concept of 'ordered rewriting rules', which form the basis of phrase structure grammar. This theory was developed with a modification to the concept of 'vocabulary' (the non-terminal vs. terminal contrast), and it contained context-sensitive phrase structure rules (PS rules), which applied when a lexical item was inserted into a specific terminal position of a P-marker. This 'lexical insertion' is no longer at work; subcategorization features of the lexicon, developed in 1965, take its place. Separating phrase structure grammar (PSG) from the lexicon simplifies PS rules to context-free rules (B → D) as opposed to context-sensitive ones (ABC → ADC).[18]

Context-sensitive phrase-structure (PS) rules have the form ABC → ADC, where B is a single symbol and A, C and D are strings of symbols (D is non-null; A and C can be null). A and C are non-null when the environment in which B needs to be rewritten as D is specified.

Context-free phrase-structure (PS) rules have the form B → D, where B is a single non-terminal symbol and D is a string of non-terminal symbols (can be non-null), into which lexical items can be inserted with their subcategorization features.

- Unboundedness and discrete infinity. In principle, a sentence can contain any number of words. Language is not continuous but discrete: linguistic expressions consist of distinct units, so a sentence has x words, or x+1 or x-1 words, but not partial words (x.1, x.2, ...). Additionally, language is not restricted in size but infinite: a sentence can contain any number x of words. The Standard Theory, created in 1960, includes 'generalized transformations' to handle cases of discrete infinity. These embed a structure into another structure of the same type. Generalized transformations were nonetheless eliminated from the Standard Theory, and their work was taken over by phrase structure grammar with a minor modification. Self-embedding occurs on either the left or the right side of phrase structure rules, using recursive symbols to allow for non-local recursion.[18]

- Endocentricity and headedness. Phrases are constructed around a head. The third fundamental property stems from the realization that transformations and phrase structure rules are not enough to capture crucial generalizations about headedness and endocentricity in human language structure. Generally, human language is endocentric: a phrase is built around one central element, called the 'head', which determines the essential properties of the phrase. Around the head are other elements that allow for the expansion of the structure. In 1968, it was discovered that this fundamental property cannot be captured by phrase structure grammar, because phrase structure rules generate structures that are not actually allowed in human languages: they need not have a head, i.e. they can be 'exocentric'. Therefore, another mechanism, X-bar theory, was introduced to capture this property.[18]

- Local and non-local semantic dependencies. Phrases can refer to some earlier step in their phrasal structure. The fourth fundamental property concerns how, within a clause, argument structure is realized in the vicinity of the predicate itself. However, additional semantic features such as scopal features and discourse features can perturb the realization of argument structure; these are often realized at the edge of an expression, most often a sentence. This interaction of additional semantic features with argument structure requires a device that can relate two non-sister nodes in a sentence to an earlier step in the derivation of a phrasal structure. Moreover, reference to the derivational history of a phrase structure cannot be expressed using a context-free phrase structure grammar. Hence the device of 'grammatical transformation' is introduced to manage the duality of local and non-local semantic dependencies.[18]

Further developments

Since the publication of bare phrase structure in 1994,[14] other linguists have continued to build on this theory. In 2002, Chris Collins continued research on Chomsky's proposal to eliminate labels, supporting Chomsky's suggestion of a simpler theory of phrase structure.[20] Collins proposed that economy features, such as Minimality, govern derivations and lead to simpler representations.

In more recent work by John Lowe and Joseph Lovestrand, published in 2020, minimal phrase structure is formulated as an extension to bare phrase structure and X-bar theory. However, it does not adopt all of the assumptions associated with the Minimalist Program (see above). Lowe and Lovestrand argue that any successful phrase structure theory should include the following seven features:[17]

- avoid non-branching dominance by using as much structure as possible to model constituency

- avoid positing optional phrase structure rules

- avoid redundant labelling to ensure phrases share the category of their heads

- avoid creating a theory that is distinct from X-bar theory

- distinguish Xmax from XP; distinguish the highest projection from Xmax

- account for exocentricity

- account for non-projecting categories

Although Bare Phrase Structure includes many of these features, it does not include all of them; other theories have therefore attempted to incorporate all of them in order to present a successful phrase structure theory.

Recursion

External and internal Merge

Chomsky (2001) distinguishes between external and internal Merge: if A and B are separate objects then we deal with external Merge; if either of them is part of the other it is internal Merge.[21]
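
The distinction can be sketched as a containment test. The sketch assumes syntactic objects are modelled as nested frozensets of strings; `is_term_of` and `merge_kind` are hypothetical helper names, and merging an object with itself is simply rejected here.

```python
def is_term_of(x, obj) -> bool:
    """True if x is obj itself or occurs anywhere inside obj."""
    if x == obj:
        return True
    if isinstance(obj, frozenset):
        return any(is_term_of(x, member) for member in obj)
    return False


def merge_kind(a, b) -> str:
    """Classify a prospective Merge of a and b as 'internal' or 'external'."""
    if a == b:
        # Assumption of this sketch: the two inputs must be distinct objects.
        raise ValueError("this sketch requires two distinct objects")
    if is_term_of(a, b) or is_term_of(b, a):
        return "internal"   # one object is part of the other
    return "external"       # the two objects are separate


clause = frozenset({"will", frozenset({"buy", "what"})})
kind_wh = merge_kind("what", clause)    # "what" is already inside the clause
kind_subj = merge_kind("she", clause)   # "she" comes from outside
```

In this toy example, re-merging "what" with the clause that contains it counts as internal Merge, while adding "she" counts as external Merge.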

Three controversial aspects of Merge

As it is commonly understood, standard Merge adopts three key assumptions about the nature of syntactic structure and the faculty of language:

- sentence structure is generated bottom-up in the mind of speakers (as opposed to top down or left to right)

- all syntactic structure is binary branching (as opposed to n-ary branching)

- syntactic structure is constituency-based (as opposed to dependency-based).

While these three assumptions are taken for granted for the most part by those working within the broad scope of the Minimalist Program, other theories of syntax reject one or more of them.

Merge is commonly seen as merging smaller constituents into greater constituents until the greatest constituent, the sentence, is reached. This bottom-up view of structure generation is rejected by representational (non-derivational) theories (e.g. Generalized Phrase Structure Grammar, Head-Driven Phrase Structure Grammar, Lexical Functional Grammar, most dependency grammars, etc.), and it is contrary to early work in Transformational Grammar. The phrase structure rules of context-free grammar, for instance, generated sentence structure top-down.

The Minimalist view that Merge is strictly binary is justified with the argument that an n-ary Merge with n ≥ 3 would inevitably lead to both undergeneration and overgeneration, and that Merge must therefore be strictly binary.[22] More formally, the forms of undergeneration given in Marcolli et al. (2023) are such that, for any n-ary Merge with n ≥ 3, only strings of length k(n − 1) + 1 for some k ≥ 1 can be generated (so sentences like "it rains" cannot be), and further, there are always strings of length k(n − 1) + 1 that are ambiguous when parsed with binary Merge, an ambiguity that an n-ary Merge with n ≥ 3 would not be able to account for.

Further, n-ary Merge with n ≥ 3 is also said to lead necessarily to overgeneration. If we take a binary tree and an n-ary tree with identical sets of leaves, then the binary tree will have a smaller number of accessible pairs of terms compared to the total number of n-tuples of accessible terms in the n-ary tree. This is responsible for the generation of ungrammatical sentences like "peanuts monkeys children will throw" (as opposed to "children will throw monkeys peanuts") with a ternary Merge.[23] Despite this, there have also been empirical arguments against strictly binary Merge, such as those coming from constituency tests,[24] and so some theories of grammar, such as Head-Driven Phrase Structure Grammar, still retain n-ary branching in the syntax.
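
The undergeneration argument can be checked with a short calculation. The sketch assumes that a string is built by k ≥ 1 applications of a single n-ary Merge, each of which replaces n objects by one, so that a complete tree has k(n − 1) + 1 leaves; the function name `generable_lengths` is hypothetical.

```python
def generable_lengths(n: int, max_length: int) -> list:
    """String lengths derivable with only n-ary Merge, up to max_length.

    Each application of n-ary Merge replaces n objects by one, so a single
    tree built with k applications has k * (n - 1) + 1 leaves.
    """
    if n < 2:
        raise ValueError("Merge needs at least two inputs")
    lengths = []
    k = 1
    while k * (n - 1) + 1 <= max_length:
        lengths.append(k * (n - 1) + 1)
        k += 1
    return lengths


binary = generable_lengths(2, 6)    # [2, 3, 4, 5, 6]
ternary = generable_lengths(3, 7)   # [3, 5, 7]
```

With ternary Merge alone, length 2 is not in the list, which is why a two-word sentence such as "it rains" cannot be generated under these assumptions; binary Merge has no such gaps.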

Merge merges two constituents in such a manner that these constituents become sister constituents and are daughters of the newly created mother constituent. This understanding of how structure is generated is constituency-based (as opposed to dependency-based). Dependency grammars (e.g. Meaning-Text Theory, Functional Generative Description, Word grammar) disagree with this aspect of Merge, since they take syntactic structure to be dependency-based.[25]

Comparison to other approaches

In other approaches to generative syntax, such as Head-Driven Phrase Structure Grammar, Lexical Functional Grammar and other types of unification grammar, the analogue to Merge is the unification operation of graph theory. In these theories, operations over attribute-value matrices (feature structures) are used to account for many of the same facts. Though Merge is usually assumed to be unique to language, the linguists Jonah Katz and David Pesetsky have argued that the harmonic structure of tonal music is also a result of the operation Merge.[26]

This notion of 'merge' may in fact be related to Fauconnier's 'blending' notion in cognitive linguistics.

Phrase structure grammar

Phrase structure grammar (PSG) represents immediate constituency relations (i.e. how words group together) as well as linear precedence relations (i.e. how words are ordered). In a PSG, a constituent contains at least one member, but has no upper bound. In contrast, with Merge theory, a constituent contains at most two members. Specifically, in Merge theory, each syntactic object is a constituent.

X-bar theory

X-bar theory is a template that claims that all lexical items project three levels of structure: X, X', and XP. Consequently, there is a three-way distinction between Head, Complement, and Specifier:

- the Head projects its category to each node in the projection;

- the Complement is introduced as sister to the Head, and forms an intermediate projection, labeled X';

- the Specifier is introduced as sister to X', and forms the maximal projection, labeled XP.

While the first application of Merge is equivalent to the Head-Complement relation, the second application of Merge is equivalent to the Specifier-Head relation. However, the two theories differ in the claims they make about the nature of the Specifier-Head-Complement (S-H-C) structure. In X-bar theory, S-H-C is a primitive; an example of this is Kayne's antisymmetry theory. In a Merge theory, S-H-C is derivative.

Notes

- ^ Chomsky (1999).

- ^ Moro, A. (2000). Dynamic Antisymmetry, Linguistic Inquiry Monograph Series 38. MIT Press.

- ^ Moro, A. (2000). Dynamic Antisymmetry, Linguistic Inquiry Monograph Series 38. MIT Press.

- ^ Miyagawa, Shigeru (2010). Why Agree? Why Move?. MIT Press.

- ^ Roeper, Tom (2007). The Prism of Grammar: How child language illuminates humanism. MIT Press.

- ^ Radford, Andrew (1990). Syntactic Theory and the Acquisition of English Syntax. Blackwell.

- ^ See Adger (2003).

- ^ Lasnik, Howard (1999). "On Feature Strength: Three Minimalist Approaches to Overt Movement". Linguistic Inquiry. 30 (2): 197–217. doi:10.1162/002438999554039. JSTOR 4179059. S2CID 57570833 – via JSTOR.

- ^ Chomsky, Noam (1995). The Minimalist Program. Cambridge MA: MIT Press.

- ^ a b c d Nunes, Jairo (1998). "Bare X-Bar Theory and Structures Formed by Movement". Linguistic Inquiry. 29 (1): 160–168. doi:10.1162/002438998553707. JSTOR 4179012. S2CID 57569962 – via JSTOR.

- ^ a b Manzini, Rita (1995). "From Merge and Move to Form Dependency". Langsci.ucl.ac.uk.

- ^ Cecchetto, Carlo (2015). "Labels from X-Bar Theory to Phrase Structure Theory". (Re)labeling: 23–44. doi:10.7551/mitpress/9780262028721.003.0002. ISBN 978-0-262-02872-1.

- ^ Hornstein, Norbert (2018). "Minimalist Program after 25 Years". Annual Review of Linguistics. 4: 49–65. doi:10.1146/annurev-linguistics-011817-045452.

- ^ a b See Chomsky, Noam. 1995. Bare Phrase Structure. In Evolution and Revolution in Linguistic Theory. Essays in honor of Carlos Otero., eds. Hector Campos and Paula Kempchinsky, 51–109.

- ^ Matthews, P. H. (2014). The Concise Oxford Dictionary of Linguistics (3 ed.). Oxford University Press.

- ^ Osborne, Timothy, Michael Putnam, and Thomas Gross 2011. Bare phrase structure, label-less structures, and specifier-less syntax: Is Minimalism becoming a dependency grammar? The Linguistic Review 28: 315–364

- ^ a b Lowe, John; Lovestrand, Joseph (2020-06-29). "Minimal phrase structure: a new formalized theory of phrase structure". Journal of Language Modelling. 8 (1): 1. doi:10.15398/jlm.v8i1.247. ISSN 2299-8470.

- ^ a b c d e Fukui, Naoki (2011). The Oxford Handbook of Linguistic Minimalism. Merge and Bare Phrase Structure. Oxford University Press. pp. 1–24.

- ^ Fukui, Naoki (2017). Merge in the Mind-Brain: Essays on Theoretical Linguistics and the Neuroscience of Language (1 ed.). New York: Routledge. doi:10.4324/9781315442808-2. ISBN 978-1-315-44280-8.

- ^ Collins, Chris (2002). Derivation and Explanation in the Minimalist Program; Eliminating Labels. Blackwell Publishers.

- ^ See Chomsky (2001).

- ^ Marcolli, Matilde; Chomsky, Noam; Berwick, Robert (2023-05-29), Mathematical Structure of Syntactic Merge, arXiv:2305.18278, retrieved 2024-02-17

- ^ Examples taken from M. A. C. Huijbregts, Empirical cases that rule out Ternary Merge, notes, 04/19/2021, used in M. Marcolli, N. Chomsky, and R. Berwick, ‘Mathematical structure of syntactic merge’, 2023, doi: 10.48550/ARXIV.2305.18278.

- ^ Concerning what constituency tests tell us about the nature of branching and syntactic structure, see Osborne (2008: 1126–32).

- ^ Concerning dependency grammars, see Ágel et al. (2003/6).

- ^ See Katz and Pesetsky (2009).

References

- Adger, D. 2003. Core syntax: A Minimalist approach. Oxford: Oxford University Press. ISBN 0-19-924370-0.

- Ágel, V., Ludwig Eichinger, Hans-Werner Eroms, Peter Hellwig, Hans Heringer, and Hennig Lobin (eds.) 2003/6. Dependency and valency: An international handbook of contemporary research. Berlin: Walter de Gruyter.

- Chomsky, N. 1999. Derivation by phase. Cambridge, MA: MIT.

- Chomsky, N. 2001. Beyond explanatory adequacy. Cambridge, MA: MIT.

- Katz, J., D. Pesetsky 2009. The identity thesis for language and music. http://ling.auf.net/lingBuzz/000959

- Kayne, R. 1981. Unambiguous paths. In R. May and J. Koster (eds.), Levels of syntactic representation, 143-183. Dordrecht: Kluwer.

- Kayne, R. 1994. The antisymmetry of syntax. Linguistic Inquiry Monograph Twenty-Five. MIT Press.

- Moro, A. 2000. Dynamic antisymmetry. Linguistic Inquiry Monograph Thirty-Eight. MIT Press.

- Osborne, T. 2008. Major constituents: And two dependency grammar constraints on sharing in coordination. Linguistics 46, 6, 1109–1165

- Radford, Andrew. 2004. Minimalist Syntax: Exploring the Structure of English. Cambridge: Cambridge University Press.