The ceremonial county of Buckinghamshire, which includes the unitary authorities of Buckinghamshire and the City of Milton Keynes, is divided into seven parliamentary constituencies: one borough constituency and six county constituencies.

Constituencies

Key: Conservative †; Labour ‡; Liberal Democrat ¤; Independent (unmarked)

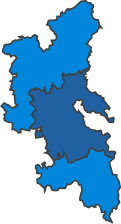

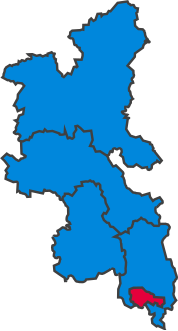

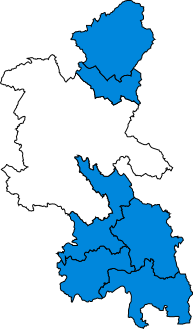

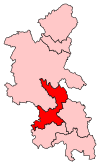

| Constituency[nb 1] | Electorate[1] | Majority[2][nb 2] | Member of Parliament[2] | Nearest opposition[2] |
|---|---|---|---|---|
| Aylesbury CC | 86,665 | 17,373 | Rob Butler † | Liz Hind ‡ |
| Beaconsfield CC | 77,720 | 15,712 | Joy Morrissey † | Dominic Grieve |
| Buckingham CC | 83,146 | 20,411 | Greg Smith † | Stephen Dorrell ¤ |
| Chesham and Amersham CC | 72,542 | 8,028 | Sarah Green ¤ | Peter Fleet † |
| Milton Keynes North CC | 91,545 | 6,255 | Ben Everitt † | Charlynne Pullen ‡ |
| Milton Keynes South BC | 96,363 | 6,944 | Iain Stewart † | Hannah O'Neill ‡ |
| Wycombe CC | 78,093 | 4,214 | Steve Baker † | Khalil Ahmed ‡ |

2010 boundary changes

Under the Fifth Periodic Review of Westminster constituencies, the Boundary Commission for England[3] decided to retain Buckinghamshire's constituencies for the 2010 election, making minor changes to realign constituency boundaries with the boundaries of current local government wards, and to reduce the electoral disparity between constituencies. The changes included the return of Great Missenden to Chesham and Amersham, Hazlemere to Wycombe and Aston Clinton to Buckingham. In addition, Marlow was transferred from Wycombe to Beaconsfield and Princes Risborough from Aylesbury to Buckingham. The boundary between the two Milton Keynes constituencies was realigned and they were renamed as Milton Keynes North and Milton Keynes South.

[Maps: former name and boundaries 1997–2010; current name and boundaries 2010–present]

Proposed boundary changes

See 2023 Periodic Review of Westminster constituencies for further details.

Following the abandonment of the Sixth Periodic Review (the 2018 review), the Boundary Commission for England formally launched the 2023 Review on 5 January 2021.[4] Initial proposals were published on 8 June 2021 and, following two periods of public consultation, revised proposals were published on 8 November 2022. The final proposals were published on 28 June 2023.

The commission has proposed that the number of seats in the combined area of Buckinghamshire and Milton Keynes be increased from seven to eight with the creation of a new constituency named Mid Buckinghamshire. This leads to significant changes elsewhere, particularly in Milton Keynes, with the creation of a cross-authority constituency named Buckingham and Bletchley, replacing the existing Buckingham seat.[5][6]

The following constituencies are proposed:

Containing electoral wards from Buckinghamshire (unitary authority)

- Aylesbury

- Beaconsfield

- Buckingham and Bletchley (part)

- Chesham and Amersham

- Mid Buckinghamshire

- Wycombe

Containing electoral wards from Milton Keynes

- Buckingham and Bletchley (part)

- Milton Keynes Central

- Milton Keynes North

Results history

Primary data source: House of Commons research briefing - General election results from 1918 to 2019[7]

2019

The numbers of votes cast for each political party that fielded candidates in the constituencies comprising Buckinghamshire in the 2019 general election were as follows:

| Party | Votes | % | Change from 2017 | Seats | Change from 2017 |
|---|---|---|---|---|---|
| Conservative | 220,814 | 52.7% | | 7 | |
| Labour | 106,226 | 25.4% | | 0 | 0 |
| Liberal Democrats | 57,554 | 13.7% | | 0 | 0 |
| Greens | 12,349 | 2.9% | | 0 | 0 |
| Brexit | 1,286 | 0.3% | new | 0 | 0 |
| Others | 20,664 | 5.0% | | 0 | |
| Total | 418,893 | 100.0% | | 7 | |

Percentage votes

Note that before 1983 Buckinghamshire included the Eton and Slough areas of what is now Berkshire.

| Election year | 1922 | 1923 | 1924 | 1929 | 1931 | 1935 | 1945 | 1950 | 1951 | 1955 | 1959 | 1964 | 1966 | 1970 | 1974 (F) | 1974 (O) | 1979 | 1983 | 1987 | 1992 | 1997 | 2001 | 2005 | 2010 | 2015 | 2017 | 2019 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Conservative | 50.2 | 47.0 | 54.3 | 47.1 | 72.3 | 60.6 | 43.4 | 45.2 | 54.3 | 53.9 | 52.5 | 48.8 | 47.1 | 52.5 | 44.3 | 44.4 | 55.0 | 56.8 | 57.0 | 57.0 | 43.7 | 45.1 | 47.8 | 44.3 | 45.5 | 47.0 | 52.7 |
| Labour | 13.8 | 19.6 | 16.3 | 19.7 | 20.9 | 29.1 | 43.8 | 39.7 | 45.7 | 40.4 | 35.4 | 36.0 | 39.7 | 35.9 | 29.7 | 32.0 | 27.4 | 14.4 | 15.5 | 19.2 | 30.6 | 30.9 | 25.9 | 15.5 | 18.1 | 29.3 | 25.4 |
| Liberal Democrat¹ | 36.1 | 33.4 | 29.4 | 33.1 | 6.8 | 10.3 | 12.7 | 14.7 | - | 5.7 | 12.1 | 15.2 | 13.2 | 11.7 | 25.4 | 22.5 | 15.9 | 28.5 | 27.0 | 22.1 | 21.2 | 19.9 | 21.2 | 20.9 | 6.5 | 6.4 | 13.7 |
| Green Party | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | * | * | * | * | * | 0.8 | 5.7 | 4.0 | 2.9 |
| UKIP | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | * | * | * | 6.2 | 14.9 | 3.2 | * |
| Brexit Party | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | 0.3 |
| The Speaker² | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | 6.3 | 9.0 | 8.5 | - |
| Other | - | - | - | - | - | - | - | 0.4 | - | - | - | - | - | - | 0.6 | 1.1 | 1.7 | 0.4 | 0.5 | 1.8 | 4.5 | 4.0 | 5.0 | 5.9 | 0.3 | 1.6 | 5.0 |

¹ Pre-1979: Liberal Party; 1983 and 1987: SDP–Liberal Alliance

² Standing in Buckingham, unopposed by the three main parties.

* Included in Other

Accurate vote percentages for the 1918 election cannot be obtained because some candidates stood unopposed.

Seats

| Election year | 1983 | 1987 | 1992 | 1997 | 2001 | 2005 | 2010 | 2015 | 2017 | 2019 |
|---|---|---|---|---|---|---|---|---|---|---|
| Conservative | 6 | 6 | 7 | 5 | 5 | 6 | 6 | 6 | 6 | 7 |
| Labour | 0 | 0 | 0 | 2 | 2 | 1 | 0 | 0 | 0 | 0 |
| The Speaker¹ | - | - | - | - | - | - | 1 | 1 | 1 | - |
| Total | 6 | 6 | 7 | 7 | 7 | 7 | 7 | 7 | 7 | 7 |

Maps

1885-1910

Election maps: 1885, 1886, 1892, 1895, 1900, 1906, Jan 1910, Dec 1910

1918-1945

Election maps: 1918, 1922, 1923, 1924, 1929, 1931, 1935, 1945

1950-1979

Election maps: 1950, 1951, 1955, 1959, 1964, 1966, 1970, Feb 1974, Oct 1974, 1979

1983-present

Election maps: 1983, 1987, 1992, 1997, 2001, 2005, 2010, 2015, 2017, 2019, 2021

Historical representation by party

A cell marked → (with a different colour background to the preceding cell) indicates that the previous MP continued to sit under a new party name.

1885 to 1945

Parties: Conservative, Liberal, Liberal Unionist

| Constituency | 1885 | 1886 | 89 | 91 | 1892 | 1895 | 99 | 1900 | 1906 | Jan 10 | Dec 10 | 12 | 14 | 1918 | 1922 | 1923 | 1924 | 1929 | 1931 | 1935 | 37 | 38 | 43 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Aylesbury | F. de Rothschild | → | W. de Rothschild | L. de Rothschild | → | Keens | Burgoyne | Beaumont | Reed | ||||||||||||||

| Buckingham | E. Verney | Hubbard | E. Verney | Leon | Carlile | F. Verney | H. Verney | Bowyer | Whiteley | Berry | |||||||||||||

| Wycombe | Curzon | Grenfell | Herbert | Cripps | du Pré | Woodhouse | Knox | ||||||||||||||||

1945 to 1983

| Constituency | 1945 | 1950 | 1951 | 52 | 1955 | 1959 | 1964 | 1966 | 1970 | Feb 1974 | Oct 1974 | 78 | 1979 | 82 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Eton and Slough | Levy | Brockway | Meyer | Lestor | ||||||||||

| Aylesbury | Reed | Summers | Raison | |||||||||||

| Buckingham | Crawley | Markham | Maxwell | Benyon | ||||||||||

| Wycombe | Haire | Astor | Hall | Whitney | ||||||||||

| Buckinghamshire South / Beaconsfield (1974) | Bell | Smith | ||||||||||||

| Chesham and Amersham | Gilmour | |||||||||||||

1983 to present

Parties: Conservative, Independent, Labour, Speaker, Liberal Democrats

| Constituency | 1983 | 1987 | 1992 | 1997 | 2001 | 2005 | 09 | 2010 | 2015 | 2017 | 19 | 2019 | 21 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Aylesbury | Raison | Lidington | Butler | ||||||||||

| Buckingham | Walden | Bercow | → | G. Smith | |||||||||

| Wycombe | Whitney | Goodman | Baker | ||||||||||

| Beaconsfield | T. Smith | Grieve | → | Morrissey | |||||||||

| Chesham and Amersham | Gilmour | Gillan | Green | ||||||||||

| Milton Keynes / NE Milton Keynes (1992) / MK North (2010) | Benyon | Butler | White | Lancaster | Everitt | ||||||||

| Milton Keynes SW / Milton Keynes S (2010) | Legg | Starkey | Stewart | ||||||||||

See also

- List of parliamentary constituencies in the South East (region)

- History of parliamentary constituencies and boundaries in Buckinghamshire

Notes

References

- Baker, Carl; Uberoi, Elise; Cracknell, Richard (28 January 2020). "General Election 2019: full results and analysis".

- "Constituencies A-Z - Election 2019". BBC News. Retrieved 24 April 2020.

- "The Parliamentary Constituencies (England) Order 2007". legislation.gov.uk. Retrieved 27 May 2020.

- "2023 Review | Boundary Commission for England". boundarycommissionforengland.independent.gov.uk. Retrieved 7 October 2021.

- Ryder, Liam (23 November 2022). "Maps show huge changes proposed to Bucks' boundaries". buckinghamshirelive. Retrieved 13 December 2022.

- "The 2023 Review of Parliamentary Constituency Boundaries in England – Volume one: Report | Boundary Commission for England". boundarycommissionforengland.independent.gov.uk. paras 941-967. Retrieved 10 July 2023.

- Watson, Christopher; Uberoi, Elise; Loft, Philip (17 April 2020). "General election results from 1918 to 2019".