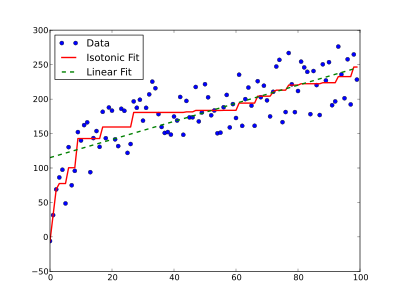

In statistics and numerical analysis, isotonic regression or monotonic regression is the technique of fitting a free-form line to a sequence of observations such that the fitted line is non-decreasing (or non-increasing) everywhere, and lies as close to the observations as possible.

Applications

Isotonic regression has applications in statistical inference. For example, one might use it to fit an isotonic curve to the means of some set of experimental results when those means are expected to increase according to some particular ordering. A benefit of isotonic regression is that it is not constrained by any functional form, such as the linearity imposed by linear regression, as long as the function is monotonically increasing.

Another application is nonmetric multidimensional scaling,[1] where a low-dimensional embedding for data points is sought such that the order of distances between points in the embedding matches the order of dissimilarity between points. Isotonic regression is used iteratively to fit ideal distances that preserve the relative dissimilarity order.

Isotonic regression is also used in probabilistic classification to calibrate the predicted probabilities of supervised machine learning models.[2]

Isotonic regression for the simply ordered case with univariate $x, y$ has been applied to estimating continuous dose-response relationships in fields such as anesthesiology and toxicology. Narrowly speaking, isotonic regression only provides point estimates at observed values of $x$. Estimation of the complete dose-response curve without any additional assumptions is usually done via linear interpolation between the point estimates.[3]

Software for computing isotone (monotonic) regression has been developed for R,[4][5][6] Stata, and Python.[7]

Problem statement and algorithms

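Let $(x_1, y_1), \ldots, (x_n, y_n)$ be a given set of observations, where the $y_i \in \mathbb{R}$ and the $x_i$ fall in some partially ordered set. For generality, each observation $(x_i, y_i)$ may be given a weight $w_i \geq 0$, although commonly $w_i = 1$ for all $i$.

Isotonic regression seeks a weighted least-squares fit $\hat{y}_i \approx y_i$ for all $i$, subject to the constraint that $\hat{y}_i \leq \hat{y}_j$ whenever $x_i \leq x_j$. This gives the following quadratic program (QP) in the variables $\hat{y}_1, \ldots, \hat{y}_n$:

$$\min \sum_{i=1}^{n} w_i (\hat{y}_i - y_i)^2 \quad \text{subject to } \hat{y}_i \leq \hat{y}_j \text{ for all } (i, j) \in E$$

where $E = \{(i, j) : x_i \leq x_j\}$ specifies the partial ordering of the observed inputs $x_i$ (and may be regarded as the set of edges of some directed acyclic graph (DAG) with vertices $1, 2, \ldots, n$). Problems of this form may be solved by generic quadratic programming techniques.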
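To make the two ingredients of the quadratic program concrete, the following minimal sketch evaluates the weighted least-squares objective and checks the order constraints for a candidate fit. The function names `weighted_sse` and `is_feasible`, the 0-based indexing, and the small example are illustrative assumptions, not part of any particular library.

```python
def weighted_sse(y_hat, y, w=None):
    """Objective of the QP: sum_i w_i * (y_hat_i - y_i)^2 (unit weights by default)."""
    if w is None:
        w = [1.0] * len(y)
    return sum(wi * (fi - yi) ** 2 for wi, fi, yi in zip(w, y_hat, y))


def is_feasible(y_hat, edges, tol=1e-12):
    """Constraint check: y_hat[i] <= y_hat[j] for every edge (i, j) in E."""
    return all(y_hat[i] <= y_hat[j] + tol for i, j in edges)


# Hypothetical partial order on three observations (0-based indices):
# x_0 <= x_1 and x_0 <= x_2, while x_1 and x_2 are not comparable.
y = [3.0, 1.0, 2.0]
edges = [(0, 1), (0, 2)]

candidate = [2.0, 2.0, 2.0]            # a constant fit always satisfies the constraints
print(is_feasible(y, edges))           # the raw observations violate the ordering
print(is_feasible(candidate, edges))
print(weighted_sse(candidate, y))
```

A generic QP solver would search over all feasible `y_hat` for the one with the smallest objective value; the sketch only evaluates a given candidate.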

In the usual setting where the $x_i$ values fall in a totally ordered set such as $\mathbb{R}$, we may assume without loss of generality that the observations have been sorted so that $x_1 \leq x_2 \leq \cdots \leq x_n$, and take $E = \{(i, i+1) : 1 \leq i < n\}$. In this case, a simple iterative algorithm for solving the quadratic program is the pool adjacent violators algorithm (PAVA). Conversely, Best and Chakravarti[8] studied the problem as an active set identification problem, and proposed a primal algorithm. These two algorithms can be seen as each other's dual, and both have a computational complexity of $O(n)$ on already sorted data.[8]
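The pooling idea for the totally ordered case can be sketched in a few lines. This is a minimal illustration rather than a reference implementation: the name `pava` is hypothetical, the observations are assumed to be already sorted by $x$, and the weights are assumed to be strictly positive so that block means are well defined.

```python
def pava(y, w=None):
    """Pool adjacent violators for observations already sorted by x.

    Returns the non-decreasing fitted values that minimize the weighted
    squared error. Assumes strictly positive weights.
    """
    if w is None:
        w = [1.0] * len(y)

    # Each block holds [weighted mean, total weight, number of points].
    blocks = []
    for yi, wi in zip(y, w):
        blocks.append([yi, wi, 1])
        # Merge backwards while the last two blocks violate monotonicity.
        while len(blocks) > 1 and blocks[-2][0] > blocks[-1][0]:
            m2, w2, c2 = blocks.pop()
            m1, w1, c1 = blocks.pop()
            total = w1 + w2
            blocks.append([(m1 * w1 + m2 * w2) / total, total, c1 + c2])

    # Expand each block back to one fitted value per observation.
    fitted = []
    for mean, _, count in blocks:
        fitted.extend([mean] * count)
    return fitted


print(pava([1.0, 3.0, 2.0, 4.0]))  # [1.0, 2.5, 2.5, 4.0]
```

Each observation is pushed once and each merge removes one block, so the loop performs a linear number of operations on sorted input, consistent with the complexity stated above.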

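To complete the isotonic regression task, we may then choose any non-decreasing function $f(x)$ such that $f(x_i) = \hat{y}_i$ for all $i$. Any such function solves

$$\min_{f} \sum_{i=1}^{n} w_i (f(x_i) - y_i)^2 \quad \text{subject to } f \text{ being non-decreasing}$$

and can be used to predict the $y$ values for new values of $x$. A common choice when $x_i \in \mathbb{R}$ would be to interpolate linearly between the points $(x_i, \hat{y}_i)$, as illustrated in the figure, yielding a continuous piecewise linear function:

$$f(x) = \begin{cases} \hat{y}_1 & \text{if } x \leq x_1 \\ \hat{y}_i + \dfrac{x - x_i}{x_{i+1} - x_i} (\hat{y}_{i+1} - \hat{y}_i) & \text{if } x_i \leq x \leq x_{i+1} \\ \hat{y}_n & \text{if } x \geq x_n \end{cases}$$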
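The linear-interpolation choice can be sketched as a small prediction function. The name `interpolate` and the example values are illustrative assumptions; the sketch also assumes the $x_i$ are sorted and distinct, and that the fitted values have already been computed by some isotonic fit.

```python
from bisect import bisect_right


def interpolate(x_sorted, y_hat, x_new):
    """Predict at x_new by linear interpolation between fitted points.

    Constant extrapolation is used outside the observed range.
    Assumes x_sorted is strictly increasing and len(x_sorted) == len(y_hat).
    """
    if x_new <= x_sorted[0]:
        return y_hat[0]
    if x_new >= x_sorted[-1]:
        return y_hat[-1]
    # Locate i such that x_sorted[i] <= x_new < x_sorted[i + 1].
    i = bisect_right(x_sorted, x_new) - 1
    x0, x1 = x_sorted[i], x_sorted[i + 1]
    t = (x_new - x0) / (x1 - x0)
    return y_hat[i] + t * (y_hat[i + 1] - y_hat[i])


# Hypothetical fitted values with one pooled (flat) stretch between x = 2 and x = 3.
x = [1.0, 2.0, 3.0, 4.0]
y_hat = [1.0, 2.5, 2.5, 4.0]
print(interpolate(x, y_hat, 1.5))  # 1.75
print(interpolate(x, y_hat, 2.5))  # 2.5
print(interpolate(x, y_hat, 0.0))  # 1.0
```

Because the fitted values are non-decreasing, the interpolated function is non-decreasing as well, and it is flat wherever adjacent fitted values were pooled.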

Centered isotonic regression

As this article's first figure shows, in the presence of monotonicity violations the resulting interpolated curve will have flat (constant) intervals. In dose-response applications it is usually known that $f(x)$ is not only monotone but also smooth. The flat intervals are incompatible with the assumed shape of $f(x)$, and can be shown to be biased. A simple improvement for such applications, named centered isotonic regression (CIR), was developed by Oron and Flournoy and shown to substantially reduce estimation error for both dose-response and dose-finding applications.[9] Both CIR and the standard isotonic regression for the univariate, simply ordered case are implemented in the R package "cir".[4] This package also provides analytical confidence-interval estimates.

References

- Kruskal, J. B. (1964). "Nonmetric Multidimensional Scaling: A numerical method". Psychometrika. 29 (2): 115–129. doi:10.1007/BF02289694. S2CID 11709679.

- Niculescu-Mizil, Alexandru; Caruana, Rich (2005). "Predicting good probabilities with supervised learning". Proceedings of the 22nd International Conference on Machine Learning. doi:10.1145/1102351.1102430. S2CID 207158152. Retrieved 2020-07-07.

- Stylianou, M. P.; Flournoy, N. (2002). "Dose finding using the biased coin up-and-down design and isotonic regression". Biometrics. 58 (1): 171–177. doi:10.1111/j.0006-341x.2002.00171.x. PMID 11890313. S2CID 8743090.

- Oron, Assaf. "Package 'cir'". CRAN. R Foundation for Statistical Computing. Retrieved 26 December 2020.

- Leeuw, Jan de; Hornik, Kurt; Mair, Patrick (2009). "Isotone Optimization in R: Pool-Adjacent-Violators Algorithm (PAVA) and Active Set Methods". Journal of Statistical Software. 32 (5): 1–24. doi:10.18637/jss.v032.i05. ISSN 1548-7660.

- Xu, Zhipeng; Sun, Chenkai; Karunakaran, Aman. "Package UniIsoRegression" (PDF). CRAN. R Foundation for Statistical Computing. Retrieved 29 October 2021.

- Pedregosa, Fabian; et al. (2011). "Scikit-learn: Machine learning in Python". Journal of Machine Learning Research. 12: 2825–2830. arXiv:1201.0490. Bibcode:2011JMLR...12.2825P.

- Best, Michael J.; Chakravarti, Nilotpal (1990). "Active set algorithms for isotonic regression; A unifying framework". Mathematical Programming. 47 (1–3): 425–439. doi:10.1007/bf01580873. ISSN 0025-5610. S2CID 31879613.

- Oron, A. P.; Flournoy, N. (2017). "Centered Isotonic Regression: Point and Interval Estimation for Dose-Response Studies". Statistics in Biopharmaceutical Research. 9 (3): 258–267. arXiv:1701.05964. doi:10.1080/19466315.2017.1286256. S2CID 88521189.

Further reading

- Robertson, T.; Wright, F. T.; Dykstra, R. L. (1988). Order restricted statistical inference. New York: Wiley. ISBN 978-0-471-91787-8.

- Barlow, R. E.; Bartholomew, D. J.; Bremner, J. M.; Brunk, H. D. (1972). Statistical inference under order restrictions; the theory and application of isotonic regression. New York: Wiley. ISBN 978-0-471-04970-8.

- Shively, T. S.; Sager, T. W.; Walker, S. G. (2009). "A Bayesian approach to non-parametric monotone function estimation". Journal of the Royal Statistical Society, Series B. 71 (1): 159–175. CiteSeerX 10.1.1.338.3846. doi:10.1111/j.1467-9868.2008.00677.x. S2CID 119761196.

- Wu, W. B.; Woodroofe, M.; Mentz, G. (2001). "Isotonic regression: Another look at the changepoint problem". Biometrika. 88 (3): 793–804. doi:10.1093/biomet/88.3.793.