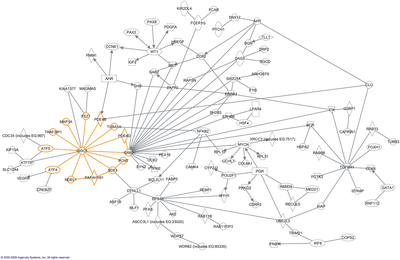

In molecular biology, an interactome is the whole set of molecular interactions in a particular cell. The term specifically refers to physical interactions among molecules (such as those among proteins, also known as protein–protein interactions, PPIs; or between small molecules and proteins[1]) but can also describe sets of indirect interactions among genes (genetic interactions).

The word "interactome" was coined in 1999 by a group of French scientists headed by Bernard Jacq.[3] Mathematically, interactomes are generally represented as graphs, in which molecules are the nodes and interactions are the edges. Though interactomes may be described as biological networks, they should not be confused with other networks such as neural networks or food webs.
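As an illustration of the graph view, the following minimal sketch stores a small interactome as an undirected adjacency structure and counts each molecule's interaction partners. The protein labels `P1` to `P4`, the interaction list, and the helper name `build_interactome` are hypothetical placeholders chosen for the example, not real data or an established API.

```python
from collections import defaultdict


def build_interactome(interactions):
    """Build an undirected graph (adjacency sets) from pairwise interactions."""
    graph = defaultdict(set)
    for a, b in interactions:
        # A physical interaction is symmetric, so record it in both directions.
        graph[a].add(b)
        graph[b].add(a)
    return dict(graph)


# Hypothetical pairwise interactions; the labels are placeholders, not real proteins.
interactions = [("P1", "P2"), ("P2", "P3"), ("P2", "P4")]

network = build_interactome(interactions)

# Degree = number of distinct interaction partners of each node.
degrees = {protein: len(partners) for protein, partners in network.items()}
```

Adjacency sets keep the sketch dependency-free and ignore duplicate pairs; a dedicated graph library would be a reasonable alternative for larger networks.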
